At annual conference with the FBI, KU crowd told to get ready for even more advanced ways to be tracked

photo by: Chad Lawhorn/Journal-World

Brian McClendon speaks at the FBI and KU Cybersecurity Conference on April 3, 2025.

Brian McClendon, the Lawrence native who became a founder of the system that powers Google Earth and modern mapping, lightly slammed his foot on the University of Kansas stage.

He told the crowd of about 400 cybersecurity experts from across the region that they should care about that faint thud because it was a precursor to the next “crazy” development that will make it easier for people to be tracked, even if they aren’t walking around with a phone in their pocket.

McClendon, a Lawrence resident and KU engineering adjunct professor who is 10 years removed from Google, told attendees of the FBI and KU Cybersecurity Conference on Thursday that researchers are developing ultra-sensitive sensors that can detect the slightest disruption in fiber optic cables that run beneath the ground.

That faint thud on the KU stage in the Kansas Union would create a slight disruption in the flow of light through the fiber optic cables that run throughout the building. With multiple sensors, you can triangulate the location of the thud, and that becomes a game-changer in the world of tracking people.
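As a rough illustration of how several sensors could pin down a thud, here is a minimal sketch that compares when the vibration reaches each sensor. McClendon did not describe the math; the grid search, the sensor layout, the 300-meters-per-second wave speed and the function name `locate_thud` are all assumptions made for this example, not details from the research he described.

```python
import math

def locate_thud(sensors, arrival_times, wave_speed, extent=20.0, step=0.1):
    """Grid-search the (x, y) point whose predicted arrival-time
    differences best match the observed ones (hypothetical helper)."""
    best_point, best_error = None, float("inf")
    n = int(round(extent / step))
    for i in range(n + 1):
        for j in range(n + 1):
            x, y = i * step, j * step
            predicted = [math.hypot(x - sx, y - sy) / wave_speed
                         for sx, sy in sensors]
            # Use differences relative to the first sensor, because the
            # moment the thud actually happened is unknown.
            error = sum(
                ((predicted[k] - predicted[0])
                 - (arrival_times[k] - arrival_times[0])) ** 2
                for k in range(1, len(sensors))
            )
            if error < best_error:
                best_point, best_error = (x, y), error
    return best_point

# Assumed setup: four sensors at the corners of a 20-meter square room.
sensors = [(0.0, 0.0), (20.0, 0.0), (0.0, 20.0), (20.0, 20.0)]
true_thud = (7.0, 12.0)   # where the foot actually landed (simulated)
speed = 300.0             # assumed vibration speed in meters per second
times = [5.0 + math.hypot(true_thud[0] - sx, true_thud[1] - sy) / speed
         for sx, sy in sensors]

estimate = locate_thud(sensors, times, speed)
```

In this simulation the estimate lands within one grid step of the simulated thud. Real fiber optic sensing would have to cope with noise and with vibration speeds that vary by material.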

“That means without any phone in your pocket or any facial recognition, cars can be tracked, people can be tracked,” McClendon said. “It is coming. You can’t stop it, but it is good to be aware of it. And it is not the end.”

McClendon said similar sensors can track the signals of a room’s Wi-Fi system, measuring the “Wi-Fi reflections” in a way that allows movements in the room to be mapped. The world of advanced sensors that track the hidden rays of light and waves that surround us in modern society is coming quickly.

“All of these signals that we take for granted and carry around with us are eventually going to be mined,” McClendon said.

Then there are self-driving cars.

McClendon, who worked on advanced projects for Uber after leaving Google, said the self-driving cars that are being tested in markets like San Francisco and Austin are doing more than just getting people from Point A to Point B.

“They are a vacuum cleaner of data,” McClendon said, because each car is highly equipped with cameras and other sensors that are constantly watching the environment around them to create more advanced maps that power the self-driving car companies.

The result is that when a city has a few hundred self-driving cars operating in it, the area has a system of cameras and sensors in place that approaches what many cities have in China, which is widely considered the most active surveillance state in the world.

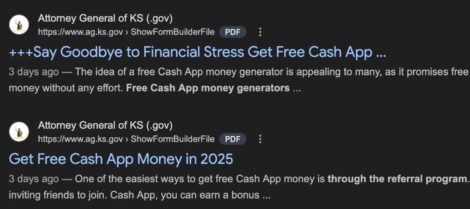

photo by: Chad Lawhorn/Journal-World

Brian McClendon, a creator of the program that became Google Earth, shows the crowd at the FBI and KU Cybersecurity Conference a photo of him in front of his boyhood home at Meadowbrook Apartments in Lawrence. In many applications, Meadowbrook Apartments is the default “center of the Earth” in the Google Earth program.

Thursday’s FBI and KU Cybersecurity Conference was the third annual event for the university, which is in the process of creating a new research center on West Campus that focuses on cybersecurity and national security threats. Thursday’s opening day included speakers from the FBI, the Department of Homeland Security and many attendees from the U.S. military. The conference continues Friday with a keynote address by a foreign intelligence officer and multiple executives in the cybersecurity field, among others.

While McClendon acknowledged his presentation to the KU audience had a few dark moments, it wasn’t all bleak. McClendon is a believer in the good that location data collection can create. He said you don’t have to look very hard to find examples of the good it is doing every day.

To prove his point, his presentation included a page from the Thursday edition of the Lawrence Journal-World. That page included two articles that both mentioned how such data helped public safety officials. One article detailed how a group of students used the location services on Snapchat to find their friend, who allegedly was being sexually assaulted. The other article detailed how a man crashed his vehicle and his iPhone automatically called dispatchers and provided his location.

McClendon told the crowd that the key to making sure such location data is useful rather than harmful in your life is to be aware of who you are sharing it with.

“The No. 1 thing you can do is don’t install apps from companies you don’t trust,” he said.

Admittedly, it can be tough to know who to trust in the world of apps, but McClendon said tough privacy regulations implemented in Europe are having an impact in the U.S., even though they haven’t been adopted here. That’s because most large app companies will want to access the European market, which means they’ve added the required European privacy features to their products. Most keep them in place for their U.S. versions. Look for features such as a permission button that must be activated before the app can start collecting any of your data, and another button that allows you to delete all your data from the app.

Even the most conscientious of tech users, however, aren’t likely to be aware of everything going on around them. McClendon shared with the crowd that he was involved in a project at Google in the early part of the last decade that nearly everyone would recognize but whose full purpose few would have guessed.

Users, even today, are frequently asked to prove they are not a robot by typing in a mishmash of letters they see on a screen. Sometimes that exercise also would include a picture that certainly looked like the address numbers hanging from a house or building. Users would be asked to identify those numbers as well.

In the case of Google, those indeed were actual address numbers from real locations. Google captured them as part of its program where vehicles drove through neighborhoods taking images for its Street View program.

But there was a problem: Google’s computer programs couldn’t reliably read the numbers because seemingly every house or building had a different style of font for its address numbers. Humans, however, can easily read the numbers because the human brain has learned the subtleties of different fonts. So, Google began including a photo of an address in addition to the mishmash of letters.

Correctly identifying the mishmash of letters was enough to convince Google that you weren’t a robot, but Google officials realized they could use the same human to determine the address number that its computer couldn’t read. For example, if one verified human said the address number was 527, Google would show the same picture to another verified human. If that one also said the number was 527, Google felt confident it now knew that address and could add it to its map.
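The two-person check described above can be sketched in a few lines. This is only an illustration of the agreement rule as McClendon told it, not Google's actual code; the function name `confirmed_address` and the `required_agreement` setting are made up for the example.

```python
from collections import Counter

def confirmed_address(answers, required_agreement=2):
    """Return the address number once enough verified humans agree on it,
    otherwise None (hypothetical helper)."""
    if not answers:
        return None
    # Find the answer given most often and how many people gave it.
    number, votes = Counter(answers).most_common(1)[0]
    return number if votes >= required_agreement else None

# Two verified humans read the same photo as 527, so it is accepted.
agreed = confirmed_address(["527", "527"])

# The two readings differ, so the photo would need more opinions.
disputed = confirmed_address(["527", "521"])
```

With two matching answers the function returns "527". When the answers differ, it returns None, and the photo would have to be shown to more people before the number could go on the map.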

“We did that 2 billion times,” McClendon said, “and mapped the world thanks to you.”

He told this to a crowd of cybersecurity professionals who ask questions for a living, and, indeed, one did at the level of a murmur: “Where’s my money?”

There was no answer, but, of course, the cybersecurity professionals know where it is not: The check is not in the (e)mail.